Neural architecture search (NAS) is sometimes called “AutoML” or “meta-learning” because it searches over a space of possible neural architectures. It is better understood as one application of the §meta_learning method.
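To make "searching over a space of possible architectures" concrete, here is a minimal sketch using random search, the simplest search strategy. Everything in it is an assumption for illustration: the `SEARCH_SPACE` dimensions, the function names, and especially `proxy_score`, a made-up formula standing in for "train the candidate and measure validation accuracy". It is not claimed to be how any system named in this note performs its search.

```python
import random

# Hypothetical toy search space: an architecture is one choice per dimension.
SEARCH_SPACE = {
    "depth": [2, 4, 8],
    "width": [16, 32, 64],
    "kernel_size": [3, 5],
}


def sample_architecture(rng):
    """Draw one architecture uniformly at random from the search space."""
    return {name: rng.choice(options) for name, options in SEARCH_SPACE.items()}


def proxy_score(arch):
    """Stand-in for training a candidate and measuring validation accuracy.

    Made-up formula (an assumption): reward capacity, penalize a rough
    parameter count. A real NAS run would train and evaluate the network here.
    """
    capacity = arch["depth"] * arch["width"]
    cost = arch["depth"] * arch["width"] ** 2 * arch["kernel_size"] ** 2
    return capacity - 1e-3 * cost


def random_search(num_trials, seed=0):
    """Sample num_trials architectures and keep the best-scoring one."""
    rng = random.Random(seed)
    best_arch, best_score = None, float("-inf")
    for _ in range(num_trials):
        arch = sample_architecture(rng)
        score = proxy_score(arch)
        if score > best_score:
            best_arch, best_score = arch, score
    return best_arch, best_score


if __name__ == "__main__":
    arch, score = random_search(num_trials=50)
    print(arch, score)
```

The three pieces (a search space, a search strategy, and a way to score candidates) are the parts a NAS method has to specify. More elaborate approaches keep this loop and replace the uniform sampler with a smarter strategy, such as evolutionary or reinforcement-learning-based search.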

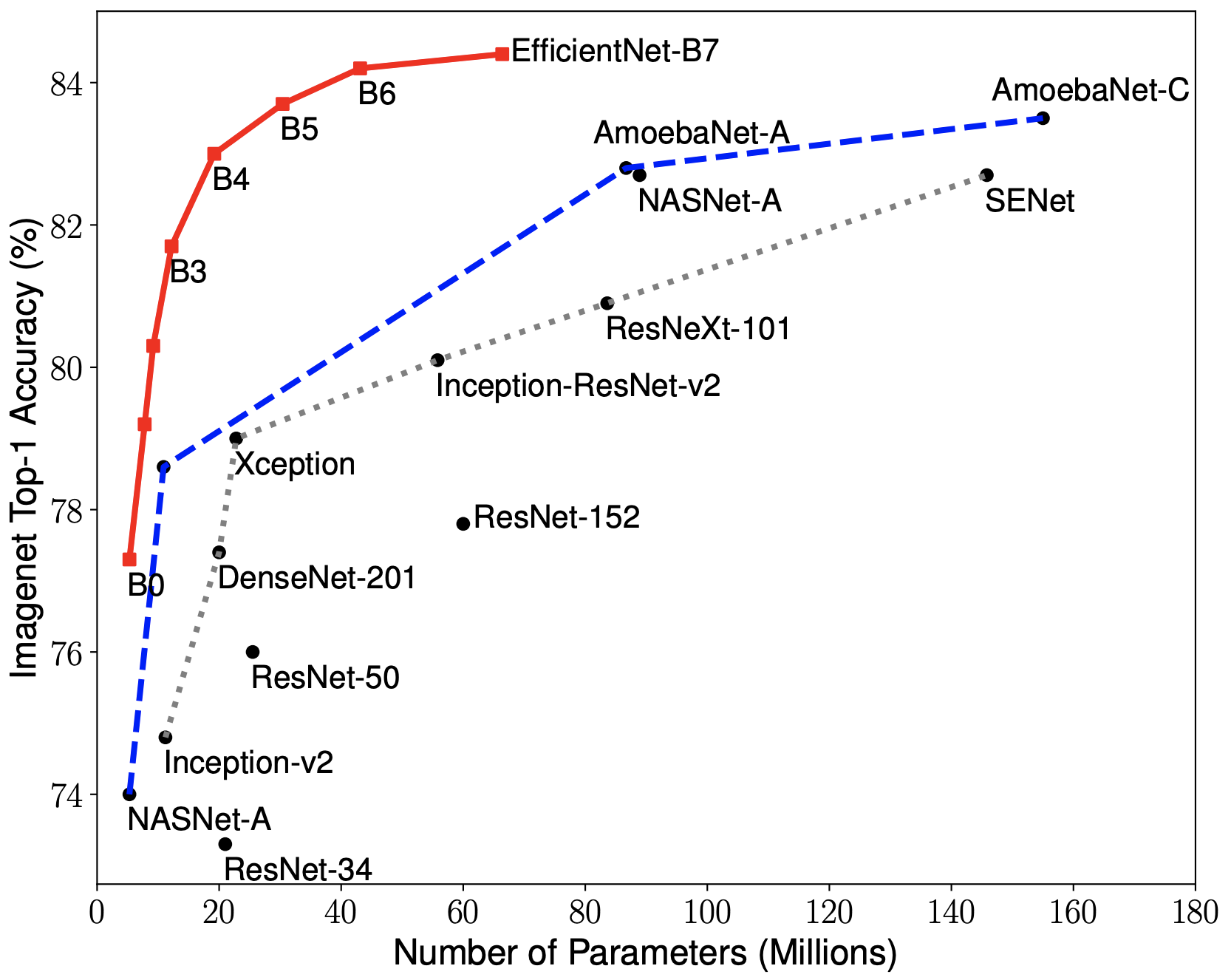

ResNet was first extended by manual designs such as DenseNet, and was then surpassed by NAS-derived architectures such as AmoebaNet and EfficientNet. A timeline of progress on image classification benchmarks can be found on Papers with Code.

Examples:

- §EfficientNet, AmoebaNet